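A few days ago I was helping someone optimize a PHP application and got thoroughly beaten up by it. Out of ideas, I finally turned on FastCGI Cache, which just about got us through. FastCGI Cache does not support distributed caching, though: with many servers, each one keeps its own copy of the same content, so the redundancy becomes very wasteful, and there are data-consistency problems on top of that. It is only a coarse-grained solution.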

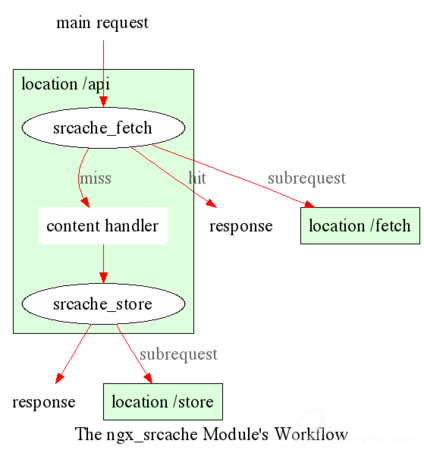

For this kind of problem, SRCache is a fine-grained solution. It works roughly as follows:

How SRCache works

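When the problem is simple, SRCache is usually paired with the Memc module. Examples are easy to find online, so I won't repeat them here. When the problem is more complex, for example when the cache key has to be computed dynamically, you have to write some code, and the Lua module is the best choice.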

Enough chatter. Here is what the Nginx configuration looks like:

    lua_shared_dict phoenix_status 100m;

    lua_package_path '/path/to/phoenix/include/?.lua;/path/to/phoenix/vendor/?.lua;;';

    init_by_lua_file /path/to/phoenix/config.lua;

    server {
        listen 80;

        server_name foo.com;

        root /path/to/root;

        index index.html index.htm index.php;

        location / {
            try_files $uri $uri/ /index.php$is_args$args;
        }

        location ~ \.php$ {
            set $phoenix_key "";
            set $phoenix_fetch_skip 1;
            set $phoenix_store_skip 1;

            rewrite_by_lua_file /path/to/phoenix/monitor.lua;

            srcache_fetch_skip $phoenix_fetch_skip;
            srcache_store_skip $phoenix_store_skip;

            srcache_fetch GET /phoenix/content key=$phoenix_key;
            srcache_store PUT /phoenix/content key=$phoenix_key;

            add_header X-SRCache-Fetch-Status $srcache_fetch_status;
            add_header X-SRCache-Store-Status $srcache_store_status;

            try_files $uri =404;

            include fastcgi.conf;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_intercept_errors on;

            error_page 500 502 503 504 = /phoenix/failover;
        }

        location = /phoenix/content {
            internal;
            content_by_lua_file /path/to/phoenix/content.lua;
        }

        location = /phoenix/failover {
            internal;
            rewrite_by_lua_file /path/to/phoenix/failover.lua;
        }
    }

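After Nginx starts, it loads the settings in config.lua. When a request arrives, SRCache is off by default. monitor.lua matches the current request against a set of regular expressions; on a match, it computes the cache key and switches SRCache on, and content.lua then performs the actual reads and writes.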

Here is config.lua, which mainly holds global configuration:

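    phoenix = {}

    -- Memcached servers: "default" holds shared settings,
    -- the numbered entries are the individual servers.
    phoenix["memcached"] = {
        default = {
            timeout = "100",
            keepalive = {idle = 10000, size = 100},
        },
        {host = "127.0.0.1", port = "11211"},
        {host = "127.0.0.1", port = "11212"},
        {host = "127.0.0.1", port = "11213"},
    }

    -- Caching rules: "default" holds fallback values,
    -- the numbered entries are matched against the request URI in order.
    phoenix["rule"] = {
        default = {
            expire = 600,
            min_uses = 0,
            max_errors = 0,
            query = {
                -- false: a request carrying this argument is not cached
                ["debug"] = false,
            },
        },
        {
            regex = "^/foo/bar",
            query = {
                -- function: normalize the argument, or return false to reject it
                ["page"] = function(v)
                    if v == "" or v == nil then
                        return 1
                    end

                    return tonumber(v) or false
                end,
                -- true: the argument is part of the cache key as-is
                ["limit"] = true,
            },
        },
    }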
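
To make the query rules concrete, here is a minimal standalone sketch of how a rule table like the one above can be applied to a request's arguments. It is plain Lua with no Nginx dependency; `apply_query_rules` is a hypothetical helper name, and the logic follows the loop in monitor.lua rather than any SRCache API.

```lua
-- Hypothetical helper: apply query rules to parsed arguments.
-- Returns the normalized arguments, or nil when the request must not be cached.
local function apply_query_rules(rules, args)
    local query = {}
    for name, rule in pairs(rules) do
        local value = rule
        if type(value) == "function" then
            value = value(args[name])   -- normalize or validate the argument
        end
        if value == true then
            value = args[name]          -- pass the argument through unchanged
        end
        if value then
            query[name] = value
        elseif args[name] then
            return nil                  -- forbidden or invalid argument present
        end
    end
    return query
end

local rules = {
    debug = false,
    limit = true,
    page = function(v)
        if v == "" or v == nil then
            return 1
        end
        return tonumber(v) or false
    end,
}

local q = apply_query_rules(rules, {limit = "20"})
print(q.page, q.limit)
```

With these rules, a request without `page` is treated as page 1, while a request carrying `debug` or a non-numeric `page` is not cached at all.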

Here is monitor.lua, which computes the cache key and switches on the SRCache module:

    local status = require "status"
    local status = status:new(ngx.shared.phoenix_status)

    -- Request URI with the query string stripped.
    local request_uri_without_args = ngx.re.sub(ngx.var.request_uri, "\\?.*", "")

    -- Lua 5.1/LuaJIT has a global unpack; newer Lua has table.unpack.
    local unpack = table.unpack or unpack

    for index, rule in ipairs(phoenix["rule"]) do
        -- A rule's regex is either a string or a {pattern, options} pair.
        if type(rule["regex"]) == "string" then
            rule["regex"] = {rule["regex"], ""}
        end

        local regex, options = unpack(rule["regex"])

        if ngx.re.match(request_uri_without_args, regex, options) then
            local default = phoenix["rule"]["default"]

            local expire = rule["expire"] or default["expire"]
            local min_uses = rule["min_uses"] or default["min_uses"]
            local max_errors = rule["max_errors"] or default["max_errors"]

            local key = {
                ngx.var.request_method, " ",
                ngx.var.scheme, "://",
                ngx.var.host, request_uri_without_args,
            }

            -- Fill in query rules the rule itself does not define.
            -- (Compare against nil so an explicit false in the rule is kept.)
            rule["query"] = rule["query"] or {}

            if default["query"] then
                for name, value in pairs(default["query"]) do
                    if rule["query"][name] == nil then
                        rule["query"][name] = value
                    end
                end
            end

            local query = {}
            local args = ngx.req.get_uri_args()

            for name, value in pairs(rule["query"]) do
                if type(value) == "function" then
                    value = value(args[name])
                end

                if value == true then
                    value = args[name]
                end

                if value then
                    query[name] = value
                elseif args[name] then
                    -- A rejected argument is present: leave SRCache off.
                    return
                end
            end

            query = ngx.encode_args(query)

            if query ~= "" then
                key[#key + 1] = "?"
                key[#key + 1] = query
            end

            key = table.concat(key)
            key = ngx.md5(key)

            ngx.var.phoenix_key = key

            local now = ngx.time()

            -- failover.lua appends a bare "phoenix" argument, which
            -- get_uri_args() reports as boolean true. ngx.var.arg_phoenix is
            -- a string or nil, never a boolean, so test the parsed args.
            if args["phoenix"] == true then
                ngx.var.phoenix_fetch_skip = 0
            else
                -- Look at the error counters of the current and previous minute.
                for i = 0, 1 do
                    local errors = status:get_errors(index, now - i * 60)

                    if errors >= max_errors then
                        ngx.var.phoenix_fetch_skip = 0
                        break
                    end
                end
            end

            local uses = status:incr_uses(key, 1)

            if uses >= min_uses then
                local timestamp = status:get_timestamp(key)

                -- Store again only when the last stored copy is old enough.
                if now - timestamp >= expire then
                    ngx.var.phoenix_store_skip = 0
                end
            end

            break
        end
    end
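
One thing worth checking in a setup like this is that the same logical request always produces the same key. Lua's `pairs` makes no promise about iteration order, so the standalone sketch below builds the query part by sorting argument names first. `canonical_query` and `cache_key_source` are hypothetical helpers, values are not URL-escaped for brevity, and this is an illustration rather than part of the original code, which relies on `ngx.encode_args` and `ngx.md5`.

```lua
-- Hypothetical helper: join arguments in sorted-name order so that equal
-- argument sets always yield the same string. Values are not URL-escaped.
local function canonical_query(args)
    local names = {}
    for name in pairs(args) do
        names[#names + 1] = name
    end
    table.sort(names)

    local parts = {}
    for _, name in ipairs(names) do
        parts[#parts + 1] = name .. "=" .. tostring(args[name])
    end
    return table.concat(parts, "&")
end

-- Hypothetical helper: the string that would be hashed into the cache key.
local function cache_key_source(method, scheme, host, path, args)
    local key = method .. " " .. scheme .. "://" .. host .. path
    local query = canonical_query(args)
    if query ~= "" then
        key = key .. "?" .. query
    end
    return key
end

print(cache_key_source("GET", "http", "foo.com", "/foo/bar",
                       {page = 1, limit = "20"}))
```

Inside Nginx, the resulting string would then go through `ngx.md5` as in monitor.lua.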

Here is content.lua, which reads and writes Memcached through the Resty library:

    local memcached = require "resty.memcached"
    local status = require "status"
    local status = status:new(ngx.shared.phoenix_status)

    local key = ngx.var.arg_key

    -- Pick a server by hashing the key; "#" counts only the numbered entries.
    local index = ngx.crc32_long(key) % #phoenix["memcached"] + 1

    local config = phoenix["memcached"][index]
    local default = phoenix["memcached"]["default"]

    local host = config["host"] or default["host"]
    local port = config["port"] or default["port"]
    local timeout = config["timeout"] or default["timeout"]
    local keepalive = config["keepalive"] or default["keepalive"]

    local memc, err = memcached:new()

    if not memc then
        ngx.log(ngx.ERR, err)
        return ngx.exit(ngx.HTTP_SERVICE_UNAVAILABLE)
    end

    if timeout then
        memc:set_timeout(timeout)
    end

    local ok, err = memc:connect(host, port)

    if not ok then
        ngx.log(ngx.ERR, err)
        return ngx.exit(ngx.HTTP_SERVICE_UNAVAILABLE)
    end

    local method = ngx.req.get_method()

    if method == "GET" then
        local res, flags, err = memc:get(key)

        if err then
            ngx.log(ngx.ERR, err)
            return ngx.exit(ngx.HTTP_SERVICE_UNAVAILABLE)
        end

        -- No value and no error: cache miss.
        if res == nil then
            return ngx.exit(ngx.HTTP_NOT_FOUND)
        end

        ngx.print(res)
    elseif method == "PUT" then
        local value = ngx.req.get_body_data()
        local expire = tonumber(ngx.var.arg_expire) or 86400

        local ok, err = memc:set(key, value, expire)

        if not ok then
            ngx.log(ngx.ERR, err)
            return ngx.exit(ngx.HTTP_SERVICE_UNAVAILABLE)
        end

        -- Remember when this key was last stored.
        status:set_timestamp(key)
    else
        return ngx.exit(ngx.HTTP_NOT_ALLOWED)
    end

    -- Return the connection to the pool for reuse.
    if type(keepalive) == "table" then
        if keepalive["idle"] and keepalive["size"] then
            memc:set_keepalive(keepalive["idle"], keepalive["size"])
        end
    end
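
The server selection in content.lua is a plain hash-modulo scheme. The standalone sketch below shows the same idea outside Nginx; `toy_hash` is a hypothetical stand-in for `ngx.crc32_long` (it is not CRC32), and `pick_server` is a name introduced only for this illustration.

```lua
-- Stand-in for ngx.crc32_long: a simple polynomial string hash (NOT CRC32),
-- used only so this sketch runs outside Nginx.
local function toy_hash(s)
    local h = 0
    for i = 1, #s do
        h = (h * 31 + s:byte(i)) % 4294967296
    end
    return h
end

local servers = {
    default = {timeout = 100},
    {host = "127.0.0.1", port = 11211},
    {host = "127.0.0.1", port = 11212},
    {host = "127.0.0.1", port = 11213},
}

-- #servers counts only the array part, so the "default" entry is ignored.
local function pick_server(key)
    local index = toy_hash(key) % #servers + 1
    return servers[index], index
end

local server, index = pick_server("d41d8cd98f00b204e9800998ecf8427e")
print(index, server.port)
```

The same key always lands on the same server, which is what lets several Nginx machines share one cache. The trade-off of a modulo scheme is that adding or removing a server remaps most keys; consistent hashing is the usual alternative if that matters.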

Here is failover.lua, which activates disaster-recovery mode when an error occurs:

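    -- Append a bare "phoenix" flag; ngx.var.args is nil when the request
    -- has no query string, so fall back to an empty string.
    ngx.req.set_uri_args((ngx.var.args or "") .. "&phoenix")
    ngx.req.set_uri(ngx.var.uri, true)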

There is also a status.lua file:

    local status = {}

    -- Key holding the time a cache entry was last stored.
    local get_timestamp_key = function(key)
        key = {
            "phoenix", "status", "timestamp", key,
        }

        return table.concat(key, ":")
    end

    -- Per-minute counter key for the number of uses.
    local get_uses_key = function(key, timestamp)
        key = {
            "phoenix", "status", "uses", key, os.date("%Y%m%d%H%M", timestamp),
        }

        return table.concat(key, ":")
    end

    -- Per-minute counter key for the number of errors.
    local get_errors_key = function(key, timestamp)
        key = {
            "phoenix", "status", "errors", key, os.date("%Y%m%d%H%M", timestamp),
        }

        return table.concat(key, ":")
    end

    local get = function(shared, key)
        return shared:get(key)
    end

    local set = function(shared, key, value, expire)
        return shared:set(key, value, expire or 86400)
    end

    -- Increment a counter, creating it at 0 first if it does not exist yet.
    local incr = function(shared, key, value, expire)
        value = value or 1

        local counter = shared:incr(key, value)

        if not counter then
            shared:add(key, 0, expire or 86400)
            counter = shared:incr(key, value)
        end

        return counter
    end

    function status:new(shared)
        return setmetatable({shared = shared}, {__index = self})
    end

    function status:get_timestamp(key)
        return get(self.shared, get_timestamp_key(key)) or 0
    end

    function status:set_timestamp(key, value, expire)
        key = get_timestamp_key(key)
        value = value or ngx.time()

        return set(self.shared, key, value, expire)
    end

    function status:get_uses(key, timestamp)
        timestamp = timestamp or ngx.time()
        key = get_uses_key(key, timestamp)

        return get(self.shared, key) or 0
    end

    function status:incr_uses(key, value, expire)
        key = get_uses_key(key, ngx.time())
        value = value or 1

        return incr(self.shared, key, value, expire)
    end

    function status:get_errors(key, timestamp)
        timestamp = timestamp or ngx.time()
        key = get_errors_key(key, timestamp)

        return get(self.shared, key) or 0
    end

    function status:incr_errors(key, value, expire)
        key = get_errors_key(key, ngx.time())
        value = value or 1

        return incr(self.shared, key, value, expire)
    end

    return status
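
To see how the per-minute counters behave without running Nginx, the sketch below swaps in a table-backed stand-in for the shared dictionary. `fake_dict` is hypothetical and implements only the `get`, `add`, and `incr` calls used here, ignoring expiry and locking; `incr` and `errors_key` copy the add-then-increment fallback and the key layout from status.lua.

```lua
-- Hypothetical in-memory stand-in for ngx.shared.DICT (no expiry, no locking).
local function fake_dict()
    local data = {}
    local dict = {}
    function dict:get(key)
        return data[key]
    end
    function dict:add(key, value)
        if data[key] ~= nil then
            return false, "exists"
        end
        data[key] = value
        return true
    end
    function dict:incr(key, value)
        if data[key] == nil then
            return nil, "not found"
        end
        data[key] = data[key] + value
        return data[key]
    end
    return dict
end

-- Same fallback as status.lua: create the counter at 0 if incr finds nothing.
local function incr(shared, key, value)
    local counter = shared:incr(key, value)
    if not counter then
        shared:add(key, 0)
        counter = shared:incr(key, value)
    end
    return counter
end

-- One counter per rule per minute, as in get_errors_key().
local function errors_key(rule, timestamp)
    return table.concat({"phoenix", "status", "errors", rule,
                         os.date("%Y%m%d%H%M", timestamp)}, ":")
end

local shared = fake_dict()
local now = os.time()
incr(shared, errors_key(1, now), 1)
incr(shared, errors_key(1, now), 1)
print(shared:get(errors_key(1, now)))            -- counter for the current minute
print(shared:get(errors_key(1, now - 60)) or 0)  -- counter for the previous minute
```

Because the minute is part of the key, each counter only ever covers one minute, and monitor.lua reads the current and the previous bucket to decide. Newer lua-nginx-module releases accept an `init` argument to `incr`, which may make the fallback unnecessary; check the documentation for your version.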

One last question: how do you tell whether the cache is working? Try the following command:

    shell> curl -v "http://foo.com/test?x=123&y=abc"
    < X-SRCache-Fetch-Status: HIT
    < X-SRCache-Store-Status: BYPASS

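At the moment I mainly use srcache to cache the JSON results of some APIs. These APIs also support JSONP, that is, the client passes a callback parameter. If you cache everything indiscriminately, responses with and without a callback both have to be stored and memory usage doubles, even though their contents are nearly identical. In practice, then, cache only the responses without a callback, and handle requests with a callback using xss-nginx-module.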
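
To illustrate the idea, the wrapping step amounts to something like the following standalone sketch: the cached body stays plain JSON, and the callback is applied only when the response goes out. `wrap_jsonp` and its validation pattern are hypothetical and are not how xss-nginx-module is implemented.

```lua
-- Hypothetical helper: wrap a cached JSON body in a JSONP callback.
-- The callback name is restricted to identifier-like characters and dots.
local function wrap_jsonp(json, callback)
    if callback == nil or callback == "" then
        return json
    end
    if not callback:match("^[%a_$][%w_$%.]*$") then
        return nil, "invalid callback"
    end
    return callback .. "(" .. json .. ");"
end

print(wrap_jsonp('{"ok":true}', "handle"))
```

Only one copy of the JSON is stored per key, regardless of which callback names clients send.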

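How much faster things get after enabling SRCache depends on the environment, but in my experience the improvement is an order of magnitude. More importantly, all of this is completely transparent to the business layer. Don't just stand there, give it a try!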
